How to use wget in Linux

Written by Guillermo Garron

Date: 2020-03-29 17:15:00 00:00

Overview

wget is one of the command-line tools in Linux. It is used to download files from the Internet over protocols such as HTTP, HTTPS, and FTP. It can download one file or lots of files, work in batches, and a lot more.

Syntax

The command syntax is:

wget [options] [url]

You can use it with no options, pointing it at a specific file, and it will download that file to the current folder on your Linux machine.

Examples

Download just one file

If you want to download just one file, get the URL of the file. If it is a picture, you can right-click on the image and copy the URL, then use it with the wget command.

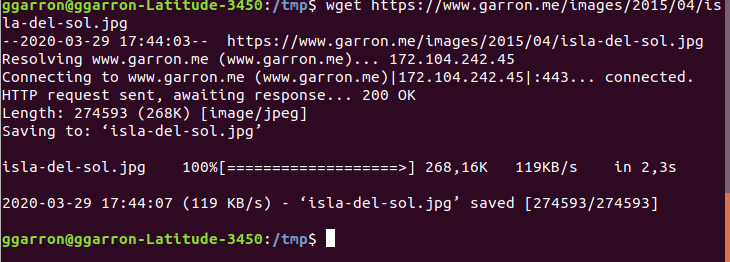

wget https://www.garron.me/images/2015/04/isla-del-sol.jpg

As you can see in the image, it just downloads the file to the folder /tmp, because that was the current working folder.

Specify the folder where to save the downloaded file

If you want to save the file in a different folder and not in the current working folder, use the -P option.

wget -P /home/user https://www.garron.me/images/2015/04/isla-del-sol.jpg
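wget accepts more than one URL on the command line, so -P can collect several files in the same folder. This is a minimal sketch, not a definitive recipe; the function name fetch_into is hypothetical, and the mkdir step is only a precaution in case the folder does not exist yet.

```shell
# Hypothetical helper: download one or more URLs into a given folder.
# $1 = target folder, remaining arguments = URLs
fetch_into() {
  dir=$1
  shift
  # Create the folder if needed, then let -P point wget at it
  mkdir -p "$dir" && wget -P "$dir" "$@"
}

# Example call:
#   fetch_into /home/user/pictures https://www.garron.me/images/2015/04/isla-del-sol.jpg
```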

Save the file with a different name

wget -O another-name.jpg https://www.garron.me/images/2015/04/isla-del-sol.jpg
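The -O option also accepts - as the file name, in which case wget writes the download to standard output instead of a file. The sketch below uses that to count the bytes of a remote file without saving it; the function name remote_size is hypothetical.

```shell
# Hypothetical helper: print the size in bytes of a remote file
# without leaving a copy on disk.
remote_size() {
  # -q silences the progress output; "-O -" sends the file to standard output
  wget -q -O - "$1" | wc -c
}

# Example call:
#   remote_size https://www.garron.me/images/2015/04/isla-del-sol.jpg
```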

Limit the bandwidth

You can force wget to use just part of the available bandwidth. In this example the download is capped at 20 kilobytes per second.

wget --limit-rate=20k http://www.site.com
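The rate takes a k suffix for kilobytes or an m suffix for megabytes. A small wrapper makes the cap easy to change per download; the function name slow_get is hypothetical and this is only a sketch.

```shell
# Hypothetical wrapper: download a URL at a capped rate.
# $1 = rate (for example 200k or 1m), $2 = URL
slow_get() {
  wget --limit-rate="$1" "$2"
}

# Example call:
#   slow_get 1m http://www.site.com
```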

Resume interrupted download

If for any reason the file you are downloading can't be fully downloaded, you can resume from where it stopped once you have an Internet connection again. This is possible only if the origin server supports resuming.

wget -c https://www.garron.me/images/2015/04/isla-del-sol.jpg
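If the connection keeps dropping, the resume step can be repeated in a loop until the download finishes. This is a minimal sketch under stated assumptions: the function name resume_download and the RETRY_PAUSE variable are hypothetical, and the defaults of 5 attempts and 5 seconds are arbitrary.

```shell
# Hypothetical helper: keep resuming a download until it succeeds
# or the attempts run out.
# $1 = URL, $2 = maximum attempts (defaults to 5)
resume_download() {
  url=$1
  attempts=${2:-5}
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -c continues from the partial file if one is already present
    if wget -c "$url"; then
      return 0
    fi
    i=$((i + 1))
    # Pause before the next attempt (seconds, 5 unless RETRY_PAUSE is set)
    sleep "${RETRY_PAUSE:-5}"
  done
  return 1
}

# Example call:
#   resume_download https://www.garron.me/images/2015/04/isla-del-sol.jpg 10
```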

Download files in a batch

You need to create a file with a list of the URLs you want to download, something like this:

https://www.garron.me/en/pictures/new-year-fireworks/new-year-eve-fireworks-19.jpg

https://www.garron.me/en/pictures/new-year-fireworks/new-year-eve-fireworks-04.jpg

https://www.garron.me/en/pictures/new-year-fireworks/new-year-eve-fireworks-02.jpg

Put those lines (one URL per line) in a plain text file, and then run this command.

wget -i files-to-download.txt
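The list file can also be written from the shell instead of a text editor. The sketch below assumes two hypothetical helper names, make_url_list and download_list; the first writes one URL per line and the second hands the file to wget.

```shell
# Hypothetical helper: write one URL per line into a list file.
# $1 = list file, remaining arguments = URLs
make_url_list() {
  list=$1
  shift
  printf '%s\n' "$@" > "$list"
}

# Hypothetical helper: download every URL found in the list file.
download_list() {
  # -i tells wget to read the URLs from the given file
  wget -i "$1"
}

# Example calls:
#   make_url_list files-to-download.txt "$url1" "$url2" "$url3"
#   download_list files-to-download.txt
```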

Create a local copy of a site for local browsing

If you want to make a local copy of a site on your disk so you can browse it offline, run this command.

wget -m -k -E -p -np https://www.garron.me/

- -m Makes a mirror copy of the site

- -k Converts the links in the downloaded pages so they can be used locally

- -E Adds an extension such as .html to downloaded files that lack one, so they open correctly locally; some sites with pretty URIs do not show the file extension

- -p Downloads all page requisites, such as JS and CSS files; otherwise the local copy will not display properly

- -np Limits the download to the starting folder and the folders below it; it does not follow links to parent folders. This comes in handy if you want to download just a portion of the site.
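Each of these short options has a long form that is easier to read back later in a script. The sketch below is the same mirror command spelled with long option names, wrapped in a hypothetical function called mirror_site.

```shell
# Hypothetical wrapper: mirror a site for offline browsing.
# Long-form equivalent of: wget -m -k -E -p -np URL
mirror_site() {
  wget --mirror \
       --convert-links \
       --adjust-extension \
       --page-requisites \
       --no-parent \
       "$1"
}

# Example call:
#   mirror_site https://www.garron.me/
```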

More tips

Some years ago I wrote about the wget command, with some other tips; find them here.

Conclusion

wget is a very useful command, and there is a lot more you can do with it. Read the man page for more options.