Nginx+Varnish compared to Nginx

Written by Guillermo Garron

Date: 2011-04-22 10:36:30 +00:00

Introduction

While checking the referrals to my page, I found that one visitor came through Google searching for "why use varnish with nginx". These days I've been experimenting with both Nginx and Varnish in front of my Apache server, which runs a Drupal application.

So, I decided to write a little about what I've found.

If you do not know about Nginx or Varnish, here is a small introduction:

From Wikipedia:

Varnish is an HTTP accelerator designed for content-heavy dynamic web sites. In contrast to other HTTP accelerators, such as Squid, which began life as a client-side cache, or Apache, which is primarily an origin server, Varnish was designed from the ground up as an HTTP accelerator.

This point is really important about Varnish, and it is what makes Varnish work so efficiently together with the Linux kernel:

Varnish stores data in virtual memory and leaves the task of deciding what is stored in memory and what gets paged out to disk to the operating system. This helps avoid the situation where the operating system starts caching data while they are moved to disk by the application.

And this makes it efficient in its CPU use:

Furthermore, Varnish is heavily threaded, with each client connection being handled by a separate worker thread. When the configured limit on the number of active worker threads is reached, incoming connections are placed in an overflow queue; only when this queue reaches its configured limit will incoming connections be rejected.
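The admission behaviour in that last quote can be modelled in a few lines of Python. This is a toy model, not Varnish code: `admit`, `max_workers` and `queue_limit` are hypothetical names standing in for Varnish's configured thread and queue limits, and the model only counts where a burst of simultaneous connections would end up.

```python
from dataclasses import dataclass


@dataclass
class Admission:
    running: int   # connections handed to their own worker thread
    queued: int    # connections waiting in the overflow queue
    rejected: int  # connections refused because the queue is full


def admit(connections: int, max_workers: int, queue_limit: int) -> Admission:
    """Toy model of where `connections` simultaneous clients end up.

    Each connection gets a worker thread until `max_workers` is reached,
    the rest wait in an overflow queue of size `queue_limit`, and only
    connections beyond that are rejected.
    """
    if min(connections, max_workers, queue_limit) < 0:
        raise ValueError("arguments must be non-negative")
    running = min(connections, max_workers)
    queued = min(connections - running, queue_limit)
    rejected = connections - running - queued
    return Admission(running, queued, rejected)


if __name__ == "__main__":
    # Hypothetical limits, chosen only for illustration.
    print(admit(connections=500, max_workers=400, queue_limit=50))
```

With these made-up limits, 400 connections run, 50 wait in the queue and 50 are rejected; below the thread limit nothing is queued or rejected at all.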

Now about Nginx:

nginx (pronounced "engine-x") is a lightweight, high-performance Web server/reverse proxy and e-mail (IMAP/POP3) proxy, licensed under a BSD-like license. It runs on Unix, Linux, BSD variants, Mac OS X, Solaris, and Microsoft Windows.

Nginx uses an asynchronous event-driven approach to handling requests which provides more predictable performance under load, in contrast to the Apache HTTP server model that uses a threaded or process-oriented approach to handling requests.
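The event-driven idea can be illustrated with Python's asyncio. This is only a conceptual sketch, not how nginx is built (nginx is written in C and relies on kernel event interfaces such as epoll or kqueue); `handle_request` and `serve_concurrently` are hypothetical names, and a short sleep stands in for waiting on the network.

```python
import asyncio
import threading
from typing import List


async def handle_request(request_id: int) -> int:
    # Waiting on the network is simulated with a short sleep. While this
    # coroutine waits, the event loop is free to run other requests.
    await asyncio.sleep(0.01)
    return threading.get_ident()  # which OS thread served this request


async def serve_concurrently(n: int) -> List[int]:
    # All n requests are in flight at once, multiplexed on one event loop.
    return await asyncio.gather(*(handle_request(i) for i in range(n)))


if __name__ == "__main__":
    thread_ids = asyncio.run(serve_concurrently(500))
    print(len(thread_ids), "requests served by", len(set(thread_ids)), "thread(s)")
```

All 500 simulated requests overlap in time, yet a single thread serves them, which is the contrast with a one-thread-or-process-per-request model.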

Here is one of its features:

- Reverse proxy with caching.

Hence, Varnish and Nginx (working as a reverse proxy) can, to some extent, be compared.

Using Nginx as an HTTP accelerator

A lot of users now run Nginx as a proxy in front of Apache: Nginx caches the pages it gets from Apache and serves them to later visitors for as long as each cached copy is still valid.
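A minimal sketch of that flow, assuming a fixed lifetime for every cached page (a stand-in for settings such as `proxy_cache_valid`). `ProxyCache` and its fake backend are hypothetical; the clock is injectable so that expiry can be shown without waiting an hour.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class ProxyCache:
    """Toy reverse-proxy cache: serve a stored copy while it is still valid,
    otherwise fetch from the backend (e.g. Apache) and store the result."""

    def __init__(self, backend: Callable[[str], str], ttl: float,
                 clock: Callable[[], float] = time.monotonic):
        self.backend = backend
        self.ttl = ttl
        self.clock = clock
        self.store: Dict[str, Tuple[float, str]] = {}  # uri -> (expires_at, body)
        self.backend_calls = 0

    def get(self, uri: str) -> str:
        now = self.clock()
        cached: Optional[Tuple[float, str]] = self.store.get(uri)
        if cached is not None and cached[0] > now:
            return cached[1]                 # hit: the backend is not touched
        self.backend_calls += 1
        body = self.backend(uri)             # miss or expired: ask the backend
        self.store[uri] = (now + self.ttl, body)
        return body


if __name__ == "__main__":
    fake_now = [0.0]
    cache = ProxyCache(backend=lambda uri: f"page for {uri}", ttl=60 * 60,
                       clock=lambda: fake_now[0])
    cache.get("/")
    cache.get("/")            # served from the cache
    fake_now[0] = 3601        # one hour and one second later
    cache.get("/")            # expired, so the backend is asked again
    print(cache.backend_calls)  # 2
```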

The cache honors the backend's "Expires", "Cache-Control: no-cache", and "Cache-Control: max-age=XXX" headers since version 0.7.48. Since version 0.7.66, "private" and "no-store" are also honored. nginx does not handle "Vary" headers when caching. To ensure that private items are not unintentionally served to all users by the cache, the backend can set "no-cache" or "max-age=0", or the proxy cache key must include user-specific data such as $cookie_xxx. However, using cookie values as part of the proxy cache key can defeat the benefits of caching for public items, so separate locations with different proxy cache key values might be necessary to separate private and public items.
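The header rules above, and the cookie-in-the-key trade-off, can be sketched as follows. This is a simplified model under my own assumptions, not nginx's implementation: `response_ttl` and `cache_key` are hypothetical helpers, header names are assumed to be lower-case, `default_ttl` plays the role of `proxy_cache_valid`, and `per_user_cookie` plays the role of `$cookie_xxx`.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Mapping, Optional


def response_ttl(headers: Mapping[str, str], now: datetime, default_ttl: int) -> int:
    """Seconds a response may be cached; 0 means "do not cache".

    Simplified: Cache-Control is checked first, then Expires, then the default.
    """
    directives = [d.strip().lower()
                  for d in headers.get("cache-control", "").split(",") if d.strip()]
    if any(d in ("no-cache", "no-store", "private") for d in directives):
        return 0
    for d in directives:
        if d.startswith("max-age="):
            value = d.split("=", 1)[1]
            return int(value) if value.isdigit() else 0
    if "expires" in headers:
        try:
            expires_at = parsedate_to_datetime(headers["expires"])
        except (TypeError, ValueError):
            return 0  # unparsable date: treat as already expired
        if expires_at.tzinfo is None:
            expires_at = expires_at.replace(tzinfo=timezone.utc)
        return max(0, int((expires_at - now).total_seconds()))
    return default_ttl


def cache_key(scheme: str, host: str, uri: str, cookies: Mapping[str, str],
              per_user_cookie: Optional[str] = None) -> str:
    """Like $scheme$proxy_host$request_uri, optionally plus one cookie value."""
    key = scheme + host + uri
    if per_user_cookie is not None:
        key += cookies.get(per_user_cookie, "")
    return key


if __name__ == "__main__":
    now = datetime(2011, 4, 22, 10, 0, tzinfo=timezone.utc)
    print(response_ttl({"cache-control": "max-age=120"}, now, 3600))           # 120
    print(response_ttl({"cache-control": "private, max-age=120"}, now, 3600))  # 0
    print(response_ttl({"expires": "Fri, 22 Apr 2011 10:05:00 GMT"}, now, 3600))  # 300
    print(cache_key("http", "example.net", "/", {"SESS": "abc"}, "SESS"))
```

Adding the cookie to the key gives every user a separate cache entry for the same URL, which is exactly why doing it for public pages defeats the cache.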

Here is a small configuration example from Server Fault:

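```nginx
http {
    # On-disk cache: two directory levels, an 8 MB shared zone for keys,
    # at most 1000 MB of cached data; entries unused for 600 minutes are removed.
    proxy_cache_path /var/www/cache levels=1:2 keys_zone=my-cache:8m max_size=1000m inactive=600m;
    # Responses are written here first, then moved into the cache.
    proxy_temp_path /var/www/cache/tmp;

    server {
        location / {
            # Forward requests to the backend (Apache in this setup).
            proxy_pass http://example.net;
            # Store responses in the zone defined above.
            proxy_cache my-cache;
            # Cache 200 and 302 responses for 60 minutes, 404s for 1 minute.
            proxy_cache_valid 200 302 60m;
            proxy_cache_valid 404 1m;
        }
    }
}
```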
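To see where `levels=1:2` puts files on disk, here is a small Python sketch. It assumes the layout described in the nginx documentation: the cache file is named after the MD5 hex digest of the cache key, the first-level directory is the last character of that digest, and the second-level directory is the two characters before it. `cache_file_path` is a hypothetical helper, and the example key assumes nginx's default `proxy_cache_key` of `$scheme$proxy_host$request_uri`.

```python
import hashlib
from typing import Sequence


def cache_file_path(cache_root: str, cache_key: str,
                    levels: Sequence[int] = (1, 2)) -> str:
    """Path of the cache file for `cache_key` under `proxy_cache_path ... levels=1:2`.

    Each directory level takes characters from the end of the MD5 digest.
    """
    digest = hashlib.md5(cache_key.encode("utf-8")).hexdigest()
    dirs = []
    end = len(digest)
    for width in levels:
        dirs.append(digest[end - width:end])
        end -= width
    return "/".join([cache_root.rstrip("/"), *dirs, digest])


if __name__ == "__main__":
    # A request for "/" proxied to http://example.net gives "httpexample.net/".
    print(cache_file_path("/var/www/cache", "httpexample.net/"))
```

As for `keys_zone=my-cache:8m`: the nginx documentation says a one-megabyte zone stores about 8 thousand keys, so 8 MB should hold the keys of roughly 64,000 cached responses, while the responses themselves live on disk up to `max_size`.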

Nginx is not purely an HTTP proxy/accelerator, but a web server that can also be used as a reverse proxy with caching capabilities.

Using Varnish

Another option is Varnish. Varnish was designed from the beginning as an HTTP accelerator; as far as I know, that is the only task it performs, and it does it really well.

Configuration is really easy and usually works out of the box, but Varnish is also very, very customizable and you can change its behaviour a lot. Its configuration language, VCL, has a C/Perl-like syntax and is translated into C and compiled, so if you are a programmer, you can do wonders with it.

You can delete cookies, set cookies, change the headers that the backend assigned to the files it serves, and a lot more. There are many published examples of Varnish configuration, and the Varnish introduction to configuration is also worth reading.
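As an example of why deleting cookies matters, here is a simplified Python model of Varnish's default request handling: by default it only looks up GET and HEAD requests that carry neither an `Authorization` nor a `Cookie` header, and passes everything else to the backend. The function names and the sample cookie are hypothetical, the model ignores the rest of the built-in VCL, and `strip_cookies` mimics what a custom rule such as `unset req.http.Cookie;` would do.

```python
from typing import Dict, Mapping


def varnish_default_lookup(method: str, headers: Mapping[str, str]) -> bool:
    """Simplified model of Varnish's built-in vcl_recv.

    True means "look it up in the cache", False means "pass to the backend".
    Header names are assumed to be lower-case.
    """
    if method not in ("GET", "HEAD"):
        return False
    return "authorization" not in headers and "cookie" not in headers


def strip_cookies(headers: Mapping[str, str]) -> Dict[str, str]:
    """Mimics a custom VCL rule such as `unset req.http.Cookie;`."""
    return {name: value for name, value in headers.items() if name != "cookie"}


if __name__ == "__main__":
    request = {"host": "www.example.com", "cookie": "__utma=1.2.3"}  # hypothetical
    print(varnish_default_lookup("GET", request))                 # False: passed
    print(varnish_default_lookup("GET", strip_cookies(request)))  # True: cacheable
```

A single analytics cookie is enough to make every request bypass the cache by default, which is why stripping cookies that the backend does not need is such a common Varnish customization.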

Some testing

The scenario

My setup is Apache + MySQL + PHP + Drupal + Boost. For those not familiar with Boost, it is similar to WP Super Cache for WordPress: it basically creates static (.html) files from the pages that Drupal generates.

As you can see, in this test both Nginx and Varnish serve static files (those created by Boost), so both work under the same conditions. With this test I'm trying to answer the question "When should I use Varnish with Nginx?".

In case this is not yet clear (I bet it is not), here are the two setups:

Test 1

Static Files ---> Nginx ---> Varnish ---> Final user

Test 2

Static Files ---> Nginx ---> Final user

Test method

I used Apache's ab tool and httperf to perform these tests.

These were the commands:

```shell
ab -kc 500 -n 10000 http://10.1.1.1/
```

and

```shell
httperf --hog --server=10.1.1.1 --wsess=2000,10,2 --rate 300 --timeout 5
```

Both were issued from 10.1.1.2 on the same LAN.
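For reference, here is what those two commands ask for, worked out in Python. The helper names are hypothetical; the ab figure assumes all 500 keep-alive connections stay open, and the httperf figures assume its default burst length of one call, so the ten calls of each session are separated by nine 2-second think times.

```python
import math


def ab_requests_per_connection(total_requests: int, concurrency: int) -> float:
    """ab -n N -c C -k: C keep-alive connections share about N / C requests each."""
    return total_requests / concurrency


def httperf_wsess_plan(sessions: int, calls_per_session: int, think_time_s: float,
                       rate_per_s: float, burst_length: int = 1) -> dict:
    """What `httperf --wsess=S,C,T --rate R` asks for.

    burst_length=1 is assumed (httperf's default), so each call is its own burst.
    """
    bursts = math.ceil(calls_per_session / burst_length)
    return {
        "planned_requests": sessions * calls_per_session,
        "seconds_to_start_all_sessions": sessions / rate_per_s,
        "min_think_time_per_session_s": (bursts - 1) * think_time_s,
    }


if __name__ == "__main__":
    print(ab_requests_per_connection(10000, 500))  # 20.0
    print(httperf_wsess_plan(2000, 10, 2, 300))
```

So the httperf run tries to start 2000 sessions in under 7 seconds, 20000 requests in total, and each session spends at least 18 seconds thinking.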

I know this is not a real-life test; at most, it shows how both setups would react to being on the Digg or Slashdot front page.

The results

With ab, using Varnish in front of Nginx

```
This is ApacheBench, Version 2.3 <$Revision: 655654 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking www.go2linux.org (be patient)
Completed 1000 requests
Completed 2000 requests
Completed 3000 requests
Completed 4000 requests
Completed 5000 requests
Completed 6000 requests
Completed 7000 requests
Completed 8000 requests
Completed 9000 requests
Completed 10000 requests
Finished 10000 requests

Server Software:        nginx/1.0.0
Server Hostname:        10.1.1.1
Server Port:            80

Document Path:          /
Document Length:        26362 bytes

Concurrency Level:      500
Time taken for tests:   44.919 seconds
Complete requests:      10000
Failed requests:        40
   (Connect: 0, Receive: 0, Length: 39, Exceptions: 1)
Write errors:           0
Keep-Alive requests:    9961
Total transferred:      267744926 bytes
HTML transferred:       263938086 bytes
Requests per second:    222.62 [#/sec] (mean)
Time per request:       2245.958 [ms] (mean)
Time per request:       4.492 [ms] (mean, across all concurrent requests)
Transfer rate:          5820.89 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    5   70.1      0    3849
Processing:     0 2183 1213.6   1850   18296
Waiting:        0 1214  561.9   1300    4248
Total:          0 2187 1224.2   1850   18312

Percentage of the requests served within a certain time (ms)
  50%   1850
  66%   2200
  75%   2650
  80%   2850
  90%   3348
  95%   3799
  98%   5904
  99%   6214
 100%  18312 (longest request)
```

The server's CPU load during the test went from 0.00 to 0.12, and Varnish was using 7% of the 768 MB of RAM.

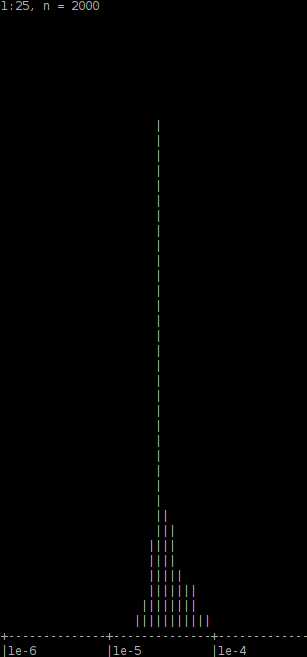

Above is a sample of the varnishhist output during the test.

With ab, directly to Nginx

```
This is ApacheBench, Version 2.3 <$Revision: 655654 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking www.go2linux.org (be patient)
Completed 1000 requests
Completed 2000 requests
Completed 3000 requests
Completed 4000 requests
Completed 5000 requests
Completed 6000 requests
Completed 7000 requests
Completed 8000 requests
Completed 9000 requests
Completed 10000 requests
Finished 10000 requests

Server Software:        nginx/1.0.0
Server Hostname:        10.1.1.1
Server Port:            80

Document Path:          /
Document Length:        26362 bytes

Concurrency Level:      500
Time taken for tests:   44.219 seconds
Complete requests:      10000
Failed requests:        130
   (Connect: 0, Receive: 0, Length: 130, Exceptions: 0)
Write errors:           0
Non-2xx responses:      130
Keep-Alive requests:    9870
Total transferred:      268179303 bytes
HTML transferred:       264475966 bytes
Requests per second:    226.15 [#/sec] (mean)
Time per request:       2210.965 [ms] (mean)
Time per request:       4.422 [ms] (mean, across all concurrent requests)
Transfer rate:          5922.61 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0   27  280.7      0    3649
Processing:    53 2066 2231.4   1348   20751
Waiting:       11  722  524.5    651    8697
Total:         71 2094 2277.4   1348   20768

Percentage of the requests served within a certain time (ms)
  50%   1348
  66%   1351
  75%   1950
  80%   2000
  90%   3801
  95%   7249
  98%  10998
  99%  13001
 100%  20768 (longest request)
```

The CPU load went from 0.00 to 0.04, lower than with Varnish in front.
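As a sanity check, ab's derived figures can be recomputed from its raw counters. This is a sketch of how I understand ab computes them; ab uses its own, more precise timer, so the last digit can differ slightly. `ab_summary` is a hypothetical helper.

```python
def ab_summary(complete: int, concurrency: int, seconds: float,
               total_bytes: int) -> dict:
    """Recompute ab's derived summary lines from its raw counters."""
    return {
        "requests_per_sec": complete / seconds,
        # "Time per request (mean)": how long each of the concurrent clients waits
        "ms_per_request": concurrency * seconds * 1000 / complete,
        # "Time per request (mean, across all concurrent requests)"
        "ms_per_request_all": seconds * 1000 / complete,
        "kbytes_per_sec": total_bytes / 1024 / seconds,
    }


if __name__ == "__main__":
    varnish = ab_summary(10000, 500, 44.919, 267744926)
    nginx = ab_summary(10000, 500, 44.219, 268179303)
    gain = (nginx["requests_per_sec"] / varnish["requests_per_sec"] - 1) * 100
    # prints "222.62 vs 226.15 req/s (+1.6% for Nginx alone)"
    print(f"{varnish['requests_per_sec']:.2f} vs {nginx['requests_per_sec']:.2f} req/s "
          f"({gain:+.1f}% for Nginx alone)")
```

With these numbers, the two setups differ by about 1.6% in requests per second.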

Testing with httperf

Varnish in front of Nginx

```
Maximum connect burst length: 11

Total: connections 1439 requests 10081 replies 9581 test-duration 46.890 s

Connection rate: 30.7 conn/s (32.6 ms/conn, <=1022 concurrent connections)
Connection time [ms]: min 5250.8 avg 26105.0 max 41659.1 median 30597.5 stddev 11292.6
Connection time [ms]: connect 621.9
Connection length [replies/conn]: 6.790

Request rate: 215.0 req/s (4.7 ms/req)
Request size [B]: 71.0

Reply rate [replies/s]: min 124.4 avg 212.8 max 252.6 stddev 42.2 (9 samples)
Reply time [ms]: response 568.8 transfer 1343.6
Reply size [B]: header 363.0 content 26274.0 footer 0.0 (total 26637.0)
Reply status: 1xx=0 2xx=9581 3xx=0 4xx=0 5xx=0

CPU time [s]: user 2.47 system 44.41 (user 5.3% system 94.7% total 100.0%)
Net I/O: 5330.5 KB/s (43.7*10^6 bps)

Errors: total 1480 client-timo 81 socket-timo 0 connrefused 0 connreset 419
Errors: fd-unavail 980 addrunavail 0 ftab-full 0 other 0

Session rate [sess/s]: min 0.00 avg 20.03 max 94.01 stddev 39.55 (939/2000)
Session: avg 1.41 connections/session
Session lifetime [s]: 38.1
Session failtime [s]: 1.2
Session length histogram: 979 10 52 11 3 4 2 0 0 0 939
```

Nginx alone, serving static files

```
Maximum connect burst length: 25

Total: connections 1529 requests 10167 replies 9570 test-duration 46.361 s

Connection rate: 33.0 conn/s (30.3 ms/conn, <=1022 concurrent connections)
Connection time [ms]: min 2007.5 avg 24596.6 max 43647.4 median 33599.5 stddev 15689.1
Connection time [ms]: connect 512.1
Connection length [replies/conn]: 6.309

Request rate: 219.3 req/s (4.6 ms/req)
Request size [B]: 72.0

Reply rate [replies/s]: min 150.6 avg 212.5 max 238.4 stddev 29.2 (9 samples)
Reply time [ms]: response 464.2 transfer 1495.6
Reply size [B]: header 369.0 content 26274.0 footer 0.0 (total 26643.0)
Reply status: 1xx=0 2xx=9570 3xx=0 4xx=0 5xx=0

CPU time [s]: user 2.16 system 44.19 (user 4.7% system 95.3% total 100.0%)
Net I/O: 5386.3 KB/s (44.1*10^6 bps)

Errors: total 1580 client-timo 90 socket-timo 0 connrefused 0 connreset 510
Errors: fd-unavail 980 addrunavail 0 ftab-full 0 other 0

Session rate [sess/s]: min 0.00 avg 20.04 max 108.61 stddev 40.80 (929/2000)
Session: avg 1.48 connections/session
Session lifetime [s]: 38.5
Session failtime [s]: 1.5
Session length histogram: 958 37 31 19 14 6 4 2 0 0 929
```
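The two httperf runs can be compared the same way. The `(939/2000)` and `(929/2000)` at the end of the Session rate lines are completed versus planned sessions. Both runs also report 980 `fd-unavail` errors, which httperf uses for times the client ran out of file descriptors, so the client itself seems to have hit a limit too. `httperf_completion` is a hypothetical helper.

```python
def httperf_completion(completed_sessions: int, planned_sessions: int,
                       replies: int, requests: int) -> dict:
    """Percentages of sessions completed and of requests that got a reply."""
    return {
        "sessions_completed_pct": 100 * completed_sessions / planned_sessions,
        "requests_answered_pct": 100 * replies / requests,
    }


if __name__ == "__main__":
    runs = {
        "Varnish in front of Nginx": httperf_completion(939, 2000, 9581, 10081),
        "Nginx alone": httperf_completion(929, 2000, 9570, 10167),
    }
    for name, r in runs.items():
        print(f"{name}: {r['sessions_completed_pct']:.2f}% of sessions completed, "
              f"{r['requests_answered_pct']:.2f}% of requests answered")
```

Both setups complete just under half of the planned sessions (46.95% and 46.45%) and answer about 95% and 94% of the requests actually sent.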

Conclusions

As you can see from these tests, if Nginx is serving static files, putting Varnish in front of it gives you no advantage. That said, you might consider it if you are on a VPS, you are somewhat concerned about latency caused by a busy disk, and your whole application or set of web pages fits into your RAM (you can add more to your VPS). In that case, running Varnish with the -s malloc option in front of Nginx may improve response times, as almost everything will be served from RAM and little or no access to the shared disk will be needed.
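A rough way to check whether your pages fit in RAM before choosing `-s malloc` (for example `-s malloc,256M`). The per-object overhead of about 1 KiB is an assumption (roughly what the Varnish documentation suggests), the page count is made up, and 26362 bytes is the document size from the ab runs above; `malloc_bytes_needed` and `fits_in_malloc` are hypothetical helpers.

```python
MIB = 1024 * 1024


def malloc_bytes_needed(objects: int, avg_object_bytes: int,
                        overhead_per_object: int = 1024) -> int:
    """Estimate the memory needed to keep `objects` pages in Varnish.

    overhead_per_object is an assumed per-object bookkeeping cost.
    """
    return objects * (avg_object_bytes + overhead_per_object)


def fits_in_malloc(objects: int, avg_object_bytes: int, malloc_mib: int) -> bool:
    """True if the estimate fits in a `-s malloc,<malloc_mib>M` store."""
    return malloc_bytes_needed(objects, avg_object_bytes) <= malloc_mib * MIB


if __name__ == "__main__":
    pages = 5000  # hypothetical number of cached pages
    needed = malloc_bytes_needed(pages, 26362)
    print(f"{needed / MIB:.1f} MiB needed, fits in 256M: "
          f"{fits_in_malloc(pages, 26362, 256)}")
```

On a server like the one used here, with 768 MB of RAM, the store also has to leave room for the operating system and the other services, so treat the result as a lower bound.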

Another case where you may want Varnish in front of Nginx is when Nginx is serving dynamic content, whether it runs PHP itself through FastCGI or passes requests to Apache. Then again, you can also turn on Nginx's own cache when it passes PHP requests to Apache.

The best option is to test every possible configuration "live" with your application and see which one makes the most of your hardware. Also consider that if you need a lot of control over what is cached and what is not, Varnish is a lot more flexible than Nginx.

In the end, the conclusion should be your own, based on your own tests: no test scenario will match your needs as well as your own "live" lab.

Be careful, and back up everything before you start working on your "live" application.